Journal of Shanghai University

Video colourisation based on voxel flow

Received date: 2019-01-13

Online published: 2021-02-28

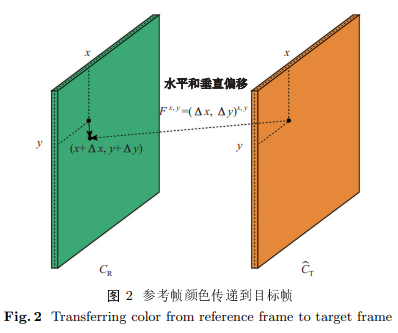

Video colourisation methods that propagate colour from keyframes using traditional optical flow are time-consuming, while those relying on global colour transfer are prone to desaturation. This paper proposes a video colourisation method based on voxel flow. The reference and target images are first converted to the Lab colour space. Their luminance (L) channels are then fed into a neural network that outputs a two-channel voxel flow. The voxel flow values give the positional colour correspondence between the target frame and the reference frame: for each pixel of the target frame, they indicate the position in the reference frame whose colour it should take. The colour channels of the target frame are obtained by bilinear interpolation of the reference frame at the positions given by the voxel flow. Finally, these colour channels are combined with the target frame's luminance channel to synthesise the colourised image. Experimental results show that the proposed method preserves the saturation of the reference image while keeping edges sharp. Compared with video colourisation methods based on traditional optical flow, it achieves a higher peak signal-to-noise ratio (PSNR) and a shorter runtime.
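The warping and recombination steps described in the abstract can be sketched in NumPy. This is a minimal illustration under stated assumptions, not the authors' implementation. The network that predicts the voxel flow is not reproduced, and the flow is simply taken as an input. Frames are assumed to be already in Lab. The two flow channels are assumed to be per-pixel (dx, dy) offsets, in pixels, from each target pixel to its corresponding reference position. All function names are hypothetical.

```python
import numpy as np


def bilinear_sample(channels: np.ndarray, xs: np.ndarray, ys: np.ndarray) -> np.ndarray:
    """Sample an (H, W, C) array at real-valued positions (xs, ys) with bilinear weights.

    Positions outside the image are clamped to the border (an assumed policy).
    """
    h, w = channels.shape[:2]
    xs = np.clip(xs, 0.0, w - 1.0)
    ys = np.clip(ys, 0.0, h - 1.0)
    x0 = np.floor(xs).astype(int)
    y0 = np.floor(ys).astype(int)
    x1 = np.minimum(x0 + 1, w - 1)
    y1 = np.minimum(y0 + 1, h - 1)
    # Fractional offsets are the interpolation weights, broadcast over the channel axis.
    fx = (xs - x0)[..., None]
    fy = (ys - y0)[..., None]
    top = (1 - fx) * channels[y0, x0] + fx * channels[y0, x1]
    bottom = (1 - fx) * channels[y1, x0] + fx * channels[y1, x1]
    return (1 - fy) * top + fy * bottom


def colourise_with_flow(target_l: np.ndarray, reference_lab: np.ndarray, flow: np.ndarray) -> np.ndarray:
    """Hypothetical helper: warp the reference a/b channels with the flow and attach the target L.

    target_l: (H, W) luminance of the greyscale target frame.
    reference_lab: (H, W, 3) Lab reference frame.
    flow: (H, W, 2) assumed (dx, dy) offsets from target pixels into the reference frame.
    """
    h, w = target_l.shape
    ys, xs = np.mgrid[0:h, 0:w].astype(float)
    ab = bilinear_sample(reference_lab[..., 1:], xs + flow[..., 0], ys + flow[..., 1])
    # The output keeps the target's own luminance and takes only colour from the reference.
    return np.concatenate([target_l[..., None], ab], axis=-1)


def psnr(a: np.ndarray, b: np.ndarray, peak: float) -> float:
    """Peak signal-to-noise ratio in dB for signals with maximum value `peak`."""
    mse = np.mean((np.asarray(a, dtype=float) - np.asarray(b, dtype=float)) ** 2)
    return float("inf") if mse == 0 else float(10 * np.log10(peak ** 2 / mse))
```

Because only the a/b channels are resampled, any blur introduced by bilinear interpolation affects colour but not luminance. This is consistent with the abstract's claim that edges stay sharp. Converting the result back to RGB for display or RGB-space PSNR would require a separate Lab-to-RGB step, for example scikit-image's `color.lab2rgb`.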

Key words: colourisation; voxel flow; deep learning; neural network; optical flow

CHEN Yu, DING Youdong, YU Bing, XU Min. Video colourisation based on voxel flow[J]. Journal of Shanghai University, 2021, 27(1): 18-27. DOI: 10.12066/j.issn.1007-2861.2119

